100 new requests in Node.js while still serving the first one

Node.js has only one thread processing JavaScript code. What happens if a request comes in, and a file needs to be read from the disk, and in the meantime, there are 100 new incoming connections?

Wouldn't the file read callback have to wait until all the other connections are served?

Well, yes, the callback would have to wait for other events to be processed first. But, as we'll see, it's all very ok.

Event-driven vs. dedicated thread per request model

The origin of this concern usually stems from the dedicated thread per request model found in traditional web servers. It steers you away from thinking how Node.js actually works, which is by interleaving small events.

The dedicated thread per request model is the way Apache, Tomcat, IIS, and many other web servers or web application containers work. In these servers, there is a predetermined amount of workers that handle every incoming request. Each worker takes a single request and serves it completely from start to finish. It is responsible for reading the resources it needs, waiting for the reads to complete, doing any processing, and returning a result. Incoming requests wait until a worker is ready to serve them.

Node.js works differently. All requests are taken in as they arrive. Instead of having multiple workers serving many requests, there is only one thread serving every request. Each request gets the whole thread for itself for the time it's being served. The catch is that as soon as some resource has to be waited on, you let go of the thread and allow others to use it. This is another way of saying Node.js performs I/O in an asynchronous and non-blocking way.

Serving a request is split into small chunks

Processing each request until some resource has to be waited on splits the processing into small pieces. The code executes until an asynchronous operation needs to be performed. This could be, for example, parsing JSON and if-then-elsing business logic rules until a row needs to be read a database. The asynchronous operation is set up, provided with a means to continue and pass its results later on, and then the thread is let go for others to use.

During waiting time, Node.js allows other requests to proceed. And similarly, the next request proceeds until it needs to wait on something. This continues until the results for the original operation are in, and the only thread is free for it to continue again and process the results.

The means to an operation to be continued later is to use a function argument known as a callback. The name originates from the fact that it will be called at some point in the future when the results are in.

Small chunks from different requests interleave

The end result is that the short bursts of processing interleave across all requests. Every request advance a tiny bit at a time. While one request is waiting for something, others can be advanced until they yet wait for something.

This is the way a healthy Node.js server works. It keeps pumping life into the application by advancing individual computations.

Going back to the original question, yes, the file read waits for other requests to be handled. The code handling each of the 100 new connections is most likely very short in length and goes quickly into reading or waiting for a resource. The cost of this is minimal, and it works well. Processing requests this way gives the likes of Node.js and nginx an edge and allows serving large loads efficiently.

Resources

You can find an example Express.js application with debug prints receiving 100 incoming requests at this github repo.

You can find information on how Node.js orchestrates all this in "Event loop from 10,000ft - core concept behind Node.js". You can find more information about why it's important to let others use the only available thread in "Let asynchronous I/O happen by returning control back to the event loop". You can find more on asynchronous calls in Blocking and non-blocking calls in Node.js

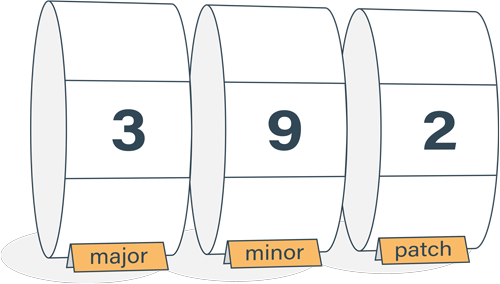

Semantic Versioning Cheatsheet

Learn the difference between caret (^) and tilde (~) in package.json.